In a world where security risks scale as quickly as the cloud itself, building a robust AWS architecture requires weaving cybersecurity into each layer of the stack. From provisioning your first EC2 instance to orchestrating containerized applications on EKS and encrypting inbound traffic with an HTTPS Load Balancer, every decision affects your overall security posture. This article examines how Identity and Access Management (IAM) and network configuration act as pillars of a secure deployment, and suggests both AWS-native and third-party tools to help you stay ahead of emerging threats.

Estimated Reading Time: ~10 minutes

1. Context: Scenario and Requirements

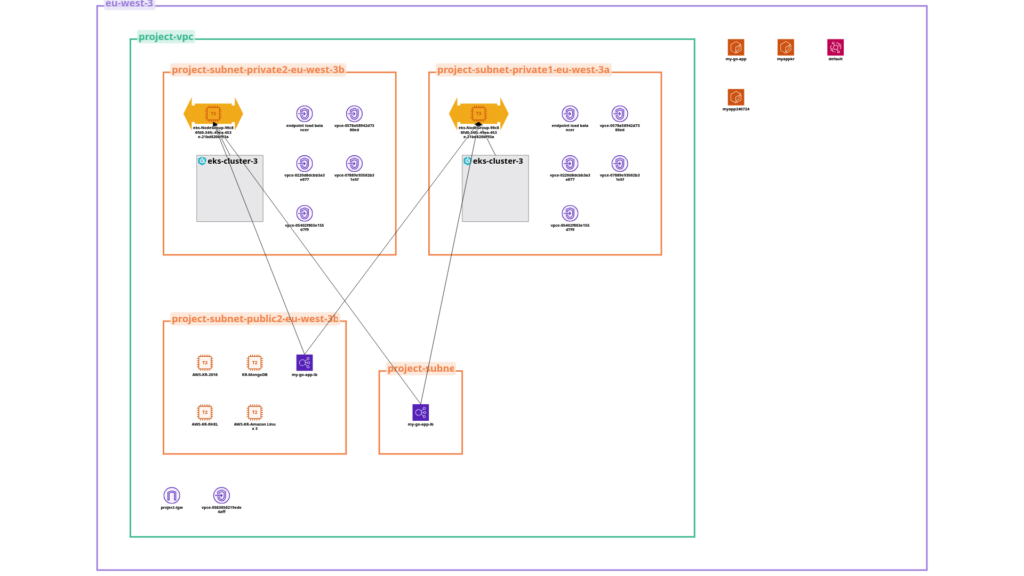

This scenario outlines deploying a database on an EC2 instance, hosting a containerized web application in an Amazon EKS cluster, and securing inbound traffic through an HTTPS Application Load Balancer. All resources need to adhere to best practices, including restricting network access, rotating credentials, and enforcing the principle of least privilege via IAM. Regular backups to Amazon S3, monitoring logs with CloudWatch, and using AWS-native or third-party cybersecurity tools (GuardDuty, WAF, Suricata, etc.) round out the requirements for a resilient, production-ready setup.

1.1 Database on EC2

- Primary Goal: Run MongoDB (or any other database) in a tightly secured environment. Restrict inbound connections to a specific CIDR range or private subnet.

- Backups & Disaster Recovery: Implement regular backups to Amazon S3, ensuring data is recoverable in the event of corruption or accidental deletion.

1.2 Web Application on EKS

- Scalability & Automation: Host containerized workloads in a Kubernetes-managed cluster (EKS), allowing rolling updates, auto-scaling, and standardized deployments.

- Secret Management: Avoid storing credentials in Docker images; use AWS Secrets Manager or Parameter Store to provide them securely at runtime.

1.3 HTTPS Load Balancer

- Secure Traffic: Enforce TLS on every public endpoint and redirect HTTP (port 80) to HTTPS (port 443).

- WAF Integration: Optionally deploy a Web Application Firewall (WAF) to inspect requests for malicious payloads (XSS, SQLi, etc.).

1.4 IAM (Identity & Access Management)

- Fine-Grained Permissions: Implement roles with the principle of least privilege, granting only necessary access to each AWS service or component.

- Traceability: Use CloudTrail and Security Hub to audit changes and detect potential misconfigurations.

2. Deploying and Securing the Database on EC2

2.1 EC2 Instance Configuration

- OS and AMI Choice

- Select Ubuntu 22.04 LTS (or another distribution with robust, frequent security updates).

- Optionally enable EBS encryption at rest for the root and data volumes.

- Security Groups

- Restrict port 27017 (MongoDB) to internal traffic. If you must expose it publicly (for remote admin tasks), whitelist only trusted IP ranges or set up a VPN/bastion approach.

- Limit SSH (port 22) to a bastion host or your office’s IP range. For ephemeral remote access, consider AWS Systems Manager Session Manager to avoid exposing port 22 entirely.

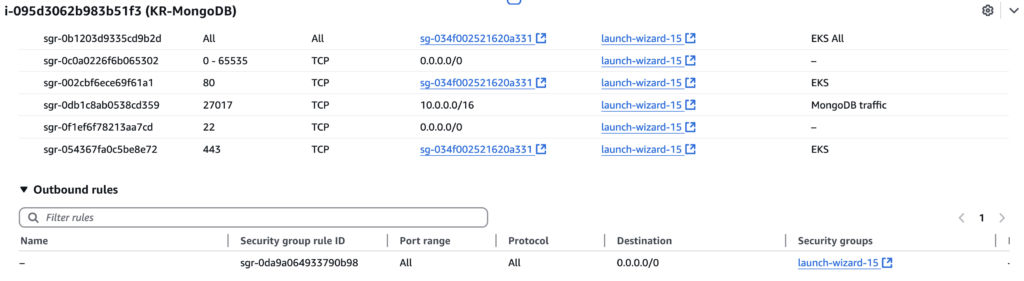

The security group rules for i-095d3062b983b51f3 (KR-MongoDB) shown above contain a few common misconfigurations. Inbound “All Traffic” from the EKS group and a wide-open port range (0–65535 from 0.0.0.0/0) greatly expand the attack surface, exposing every unused port to the internet. Leaving SSH (port 22) open to the world likewise invites brute-force attempts. This setup may be acceptable for a quick test, but it is risky in production or in any publicly reachable environment. Instead, allow only the ports the database needs (27017, from the EKS worker security group), route administrative access through a bastion host or Session Manager, and limit SSH to known IPs or private subnets.
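The misconfigurations described above can also be caught programmatically. The following is a minimal sketch. It assumes you have exported the group with `aws ec2 describe-security-groups` and passes one entry of the returned `SecurityGroups` list, with its `IpPermissions`, `IpRanges`, and `UserIdGroupPairs` fields. The sensitive-port list and the finding messages are illustrative choices, not an AWS feature. For continuous checking, managed services such as AWS Config or Security Hub do the same job.

```python
"""Flag risky inbound security-group rules (illustrative sketch, not an AWS tool).

Input: one item of the "SecurityGroups" list returned by
`aws ec2 describe-security-groups`, i.e. a dict with "IpPermissions".
"""

# Ports that should never be reachable from the whole internet (illustrative choice)
SENSITIVE_PORTS = {22: "SSH", 27017: "MongoDB"}
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def audit_security_group(group):
    findings = []
    for rule in group.get("IpPermissions", []):
        proto = rule.get("IpProtocol")
        cidrs = {r.get("CidrIp") for r in rule.get("IpRanges", [])}
        cidrs |= {r.get("CidrIpv6") for r in rule.get("Ipv6Ranges", [])}
        public = bool(cidrs & OPEN_CIDRS)

        if proto == "-1":  # "-1" means every protocol on every port
            if public:
                findings.append("all traffic open to the internet")
            for pair in rule.get("UserIdGroupPairs", []):
                findings.append(f"all traffic allowed from {pair.get('GroupId')}")
            continue

        if not public or proto not in ("tcp", "udp"):
            continue  # scoped rules and ICMP are out of scope for this sketch
        lo, hi = rule["FromPort"], rule["ToPort"]
        if hi > lo:
            findings.append(f"{proto} ports {lo}-{hi} open to the internet")
        for port, name in SENSITIVE_PORTS.items():
            if lo <= port <= hi:
                findings.append(f"{name} (port {port}) open to the internet")
    return findings


if __name__ == "__main__":
    example = {"IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    ]}
    print(audit_security_group(example))  # ['SSH (port 22) open to the internet']
```

Applied to a group like the one in the screenshot, this reports the 0–65535 range, the public SSH rule, and the "All Traffic" grant from the EKS group as separate findings.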

- Extra Hardening

- Disable password login; rely exclusively on key-based SSH.

- Keep the system patched, for example by enabling `unattended-upgrades` for security updates on Ubuntu.
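To verify that password login is really off, you can inspect `/etc/ssh/sshd_config`. The sketch below assumes a single file. It relies on sshd keeping the first value it reads for most keywords. It does not follow `Include` directives, which Ubuntu 22.04 uses for `sshd_config.d/*.conf`, and it stops at the first `Match` block. The authoritative check remains `sudo sshd -T`, which prints the effective configuration. The function names are hypothetical helpers.

```python
import re

# sshd_config accepts "Keyword value" as well as "Keyword=value"
_SPLIT = re.compile(r"\s*=\s*|\s+")


def effective_sshd_setting(config_text, keyword, default=None):
    """Return the value sshd would use for `keyword` from a single file.

    sshd keeps the first value it reads for most keywords. This sketch does
    not follow Include directives and stops at the first Match block.
    """
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        parts = _SPLIT.split(line, maxsplit=1)
        if len(parts) != 2:
            continue
        key, value = parts[0].lower(), parts[1].strip()
        if key == "match":
            break  # settings below apply only conditionally
        if key == keyword.lower():
            return value
    return default


def password_login_disabled(config_text):
    # OpenSSH's built-in default for PasswordAuthentication is "yes"
    value = effective_sshd_setting(config_text, "PasswordAuthentication", "yes")
    return value.lower() == "no"
```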

2.2 MongoDB Configuration

- Access Control

- Enable auth in `/etc/mongod.conf` by setting `security.authorization: enabled`.

- Create role-specific users: for instance, an `appUser` with `readWrite` on a single database, and an admin user with broader privileges.

- Store credentials securely (e.g., in AWS Secrets Manager).

- Transport Layer Security

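- If external services need to connect to MongoDB, enable TLS (for example `net.tls.mode: requireTLS` with a server certificate) so credentials and data are encrypted in transit.

- For internal-only usage, at minimum bind MongoDB to its private address (`net.bindIp`) and restrict it to the private subnet.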

- Regular Backups

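- Script example (`mongodb_backup.sh`), which reads the database password from AWS Secrets Manager at runtime instead of hard-coding it:

```bash
#!/bin/bash
set -euo pipefail  # abort on the first failed command or unset variable

TIMESTAMP=$(date +%Y-%m-%d_%H-%M-%S)
# Fetch the password at runtime (secret ID "mongodb/appUser" is an example name)
DB_PASSWORD=$(aws secretsmanager get-secret-value --secret-id mongodb/appUser \
  --query SecretString --output text)

mongodump --host localhost --port 27017 --username "appUser" \
  --password "$DB_PASSWORD" --authenticationDatabase "admin" \
  --out "/backup/$TIMESTAMP"
aws s3 sync "/backup/$TIMESTAMP" "s3://my-backup-bucket/$TIMESTAMP"
```

- Integrate with `cron` for daily or weekly backups, then rotate old backups (e.g., older than 14 days).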

2.3 Logs & Monitoring

- AWS CloudWatch Logs

- Stream MongoDB logs to CloudWatch Logs using the CloudWatch agent on the instance or, if MongoDB runs in Docker, the `awslogs` logging driver or a sidecar container.

- Set alarms on high error rates or repeated authentication failures, for example with a metric filter on failed-authentication log entries feeding a CloudWatch alarm.

- AWS CloudTrail

- Every change in security group rules, IAM policy updates, or new resource creation is logged here.

- Use a multi-region trail (or an organization trail for multiple accounts) so logs from every region and account are consolidated and no critical events are missed.
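To illustrate what "every change in security group rules or IAM policy updates" looks like in CloudTrail, here is a sketch that picks those events out of one log file. CloudTrail delivers gzipped JSON files with a top-level `Records` array to the trail's S3 bucket, and this sketch assumes the file has already been decompressed. The watched event names are real CloudTrail event names, but the set is not exhaustive. In production, an EventBridge rule or a CloudWatch Logs metric filter would do this continuously.

```python
import json

# CloudTrail event names for security-group and IAM policy changes (not exhaustive)
WATCHED_EVENTS = {
    "AuthorizeSecurityGroupIngress", "AuthorizeSecurityGroupEgress",
    "RevokeSecurityGroupIngress", "RevokeSecurityGroupEgress",
    "AttachRolePolicy", "DetachRolePolicy", "PutRolePolicy",
    "AttachUserPolicy", "PutUserPolicy", "CreatePolicyVersion",
}


def security_relevant_events(log_file_text):
    """Pick watched events out of one (already gunzipped) CloudTrail log file.

    Returns (eventTime, eventName, caller ARN) tuples in file order.
    """
    records = json.loads(log_file_text).get("Records", [])
    return [
        (r.get("eventTime"), r["eventName"], r.get("userIdentity", {}).get("arn", "unknown"))
        for r in records
        if r.get("eventName") in WATCHED_EVENTS
    ]
```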

Hosting MongoDB on an EC2 instance is a valid approach, especially if you need granular control, but AWS offers managed services that reduce operational overhead and improve reliability. Amazon DocumentDB (with MongoDB compatibility) takes over patching, scaling, and automated backups, and integrates natively with other AWS services. If a relational model suits your use case, Amazon RDS or Amazon Aurora might be more cost-effective and less maintenance-intensive. Managed options spare you manual updates and security patches, and they turn high availability and automatic failover (e.g., Multi-AZ deployments) into configuration settings you enable, so you can focus on application development instead of database administration.

Recommended Cybersecurity Tools

- AWS Security Hub

- Regularly checks resources against CIS and PCI DSS benchmarks, highlighting misconfigurations.

- Non-AWS Alternative: Wazuh (an OSSEC fork) or Elastic Security for compliance scanning and SIEM capabilities.

- Amazon GuardDuty

- Analyzes VPC Flow Logs, DNS query logs, and CloudTrail events to detect intrusion attempts or suspicious activity.

- Non-AWS Alternative: Suricata or Zeek for deep packet inspection and intrusion detection.

- Amazon Detective

- Correlates GuardDuty findings with CloudTrail and VPC Flow Logs data for deeper incident investigations.

- Non-AWS Alternative: Tools like Arkime or Malcolm for a self-managed network forensics platform.

3. Exposing the Web Application on EKS

3.1 EKS Cluster Setup

- Networking

- Separate subnets: `public` subnets for load balancers, `private` subnets for worker nodes.

- If using AWS Fargate for serverless Kubernetes pods, define appropriate Fargate profiles.

- RBAC & IAM Roles

- Create a dedicated IAM role for the control plane, another for node instances.

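- Map the node role (and any user or CI roles) to Kubernetes identities using the `aws-auth` ConfigMap, ensuring each node or user gets only the necessary privileges. The control plane role is assumed by EKS itself and is not mapped there.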

- Autoscaling & Resilience

- Enable Cluster Autoscaler to add nodes when pods cannot be scheduled and to remove underused nodes.

- Use Horizontal Pod Autoscaler to dynamically adjust pod replicas under CPU/memory load.
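The core of the Horizontal Pod Autoscaler is a short formula from the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). Changes within a default tolerance of 10% are skipped. The sketch below restates only that rule, clamped to min/max bounds. The real controller also applies scale-down stabilization and special handling for unready pods.

```python
import math


def desired_replicas(current_replicas, current_value, target_value,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Core HPA rule: ceil(current * currentMetric / targetMetric), clamped.

    Omits what the real controller also does: scale-down stabilization,
    handling of unready pods, and combining several metrics.
    """
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 50% target
    print(desired_replicas(3, 90, 50))  # 6
```

When the HPA asks for more pods than the current nodes can hold, those pods stay pending. That pending state is the signal Cluster Autoscaler uses to add nodes.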

3.2 Containerization

- Dockerfile Hardening

- Base images: prefer minimal distributions (e.g., `alpine`) and keep them updated.

- Multi-stage builds: remove development tools or secrets from the final image to reduce the attack surface.

- Amazon ECR

- Images are encrypted at rest by default; choose KMS encryption when creating the repository if you need control over the key.

- Use repository policies to limit push/pull actions to certain IAM entities.

- Application Secrets

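- AWS Secrets Manager or Parameter Store integrates with EKS through the Secrets Store CSI Driver and the AWS Secrets and Configuration Provider (ASCP), which mount secrets into pods as files; they can optionally be synced to Kubernetes Secrets and exposed as environment variables.

- Never commit credentials in plain text to Git, whether in manifests or in environment variable definitions.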
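On the application side, runtime injection means the container reads its credentials when it starts instead of having them baked into the image. The sketch below assumes a mounted-file layout such as the Secrets Store CSI Driver produces. The mount path `/mnt/secrets-store` and the secret names are hypothetical; the real path is whatever `mountPath` your pod spec declares. The helper prefers the mounted file and falls back to an environment variable.

```python
import os
from pathlib import Path

# Hypothetical mount path: with the Secrets Store CSI Driver, secrets appear as
# files under whatever mountPath the pod spec declares.
DEFAULT_SECRET_DIR = "/mnt/secrets-store"


def read_secret(name, secret_dir=DEFAULT_SECRET_DIR, allow_env=True):
    """Read a secret injected at runtime: mounted file first, then env var."""
    path = Path(secret_dir) / name
    if path.is_file():
        return path.read_text().rstrip("\n")  # drop the trailing newline many tools add
    if allow_env and name in os.environ:
        return os.environ[name]
    # Fail loudly rather than falling back to a baked-in default credential
    raise KeyError(f"secret {name!r} not found in {secret_dir} or the environment")
```

Usage would be `password = read_secret("MONGO_PASSWORD")`, where the secret name is an example. Raising an error on a missing secret is deliberate: silently falling back to a default credential is exactly what this practice is meant to prevent.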

3.3 Kubernetes Policies & Observability

- Network Policies

- Block cross-namespace traffic by default, then allow communication only where required.

- Tools like Calico or Cilium can enforce these policies; Cilium’s eBPF data plane can also apply layer 7 (e.g., HTTP-aware) rules.

- Audit Logging

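- On EKS the API server is managed, so you cannot set its audit flags directly; instead, enable the `audit` control plane log type for visibility into cluster-level changes (e.g., new roles and role bindings).

- EKS delivers these logs to CloudWatch Logs, from where you can forward them to an external SIEM (Splunk, Graylog).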
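The "block by default, then allow where required" pattern maps onto standard `networking.k8s.io/v1` NetworkPolicy objects. The sketch below generates them as plain dictionaries. `kubectl` accepts JSON as well as YAML, so the output can be piped to `kubectl apply -f -`. The namespace names, labels, and port are hypothetical. Two assumptions matter here. First, EKS only enforces these objects when a policy engine is running, such as the VPC CNI's network policy feature, Calico, or Cilium. Second, a default deny on egress also blocks DNS, so the sketch adds an explicit DNS allowance.

```python
import json


def _policy(name, namespace, spec):
    return {"apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
            "metadata": {"name": name, "namespace": namespace}, "spec": spec}


def _ns(name):
    # Namespaces carry this label automatically on Kubernetes 1.21+
    return {"namespaceSelector": {"matchLabels": {"kubernetes.io/metadata.name": name}}}


def default_deny(namespace):
    # Empty podSelector = every pod in the namespace; no rules = nothing allowed
    return _policy("default-deny-all", namespace,
                   {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]})


def allow_dns(namespace):
    # Without this, default-deny egress also blocks lookups to CoreDNS in kube-system
    return _policy("allow-dns-egress", namespace, {
        "podSelector": {}, "policyTypes": ["Egress"],
        "egress": [{"to": [_ns("kube-system")],
                    "ports": [{"protocol": "UDP", "port": 53}, {"protocol": "TCP", "port": 53}]}],
    })


def allow_from_namespace(namespace, app, source_namespace, port):
    return _policy(f"allow-{source_namespace}-to-{app}", namespace, {
        "podSelector": {"matchLabels": {"app": app}}, "policyTypes": ["Ingress"],
        "ingress": [{"from": [_ns(source_namespace)],
                     "ports": [{"protocol": "TCP", "port": port}]}],
    })


if __name__ == "__main__":
    # Hypothetical namespaces; pipe the output to `kubectl apply -f -`
    items = [default_deny("web"), allow_dns("web"),
             allow_from_namespace("web", "my-webapp", "frontend", 8080)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```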

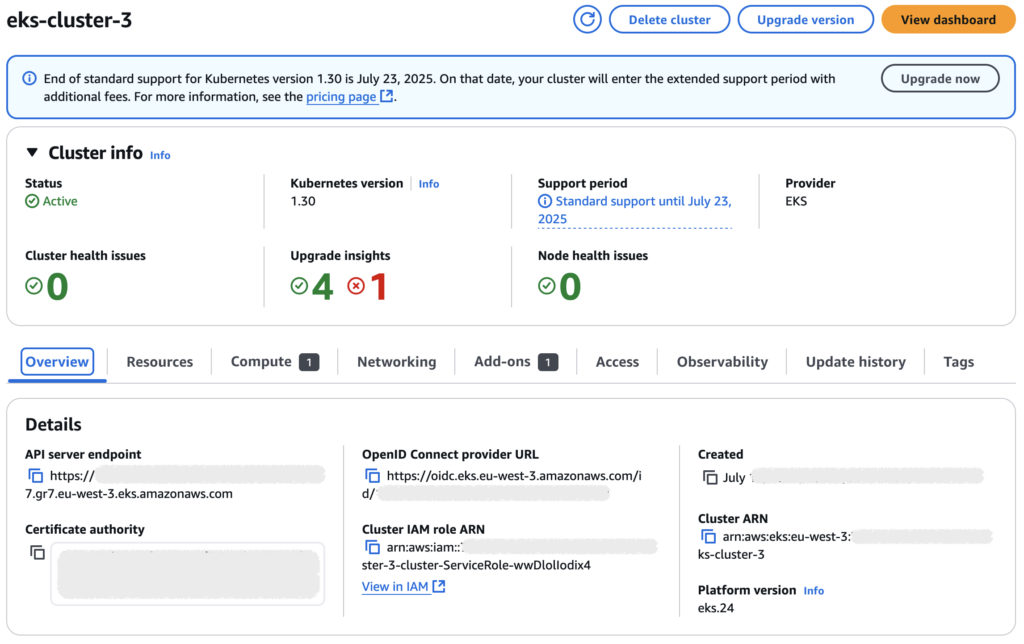

This screenshot shows key details of an Amazon EKS cluster named eks-cluster-3: its status (Active), the Kubernetes version (1.30), and the API server endpoint. It also shows the cluster’s IAM role ARN, the certificate authority data (Base64-encoded), and the OpenID Connect (OIDC) provider URL, which IAM roles for service accounts (IRSA) rely on to let pods assume IAM roles. These elements are essential for cluster operations but should be redacted before sharing publicly to avoid exposing unique identifiers or endpoints.

Recommended Cybersecurity Tools

- Amazon Inspector

- Scans container images in Amazon ECR (as well as EC2 instances) for known CVEs.

- Non-AWS Alternatives: Trivy or Clair for open-source scanning, integrated into CI/CD pipelines.

- OPA/Gatekeeper

- Enforces policies like “no container runs as root” or “only signed images are deployed.”

- Alternatives: Kyverno, Kubewarden for policy-as-code approaches.

- Kubernetes Network Policies

- Minimizes lateral movement in case one pod is compromised.

- Enhanced Tools: Istio or Linkerd for mTLS between services, providing zero-trust networking.
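To make a policy like "no container runs as root" concrete, the sketch below restates its logic in Python over a pod spec. Gatekeeper policies are actually written in Rego as ConstraintTemplates, so this is not Gatekeeper's API, only the check it would perform. A container passes if its effective `securityContext` sets `runAsNonRoot: true` or a non-zero `runAsUser`. Container-level settings override pod-level ones. Kubernetes' built-in Pod Security Admission "restricted" level enforces a similar rule without extra tooling.

```python
def containers_that_may_run_as_root(pod_spec):
    """Return names of containers not guaranteed to run as a non-root user.

    A container passes if its effective securityContext sets runAsNonRoot: true
    or a non-zero runAsUser; container-level fields override pod-level ones.
    """
    pod_ctx = pod_spec.get("securityContext", {})
    offenders = []
    for c in pod_spec.get("initContainers", []) + pod_spec.get("containers", []):
        ctx = {**pod_ctx, **c.get("securityContext", {})}
        run_as_user = ctx.get("runAsUser")
        if run_as_user == 0:
            offenders.append(c["name"])  # explicitly root
        elif ctx.get("runAsNonRoot") is True or (run_as_user or 0) > 0:
            continue  # guaranteed non-root
        else:
            offenders.append(c["name"])  # falls back to the image's USER, possibly root
    return offenders
```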

4. Load Balancing and Traffic Encryption

4.1 Application Load Balancer (ALB)

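- Annotations in the Ingress Manifest

- Example snippet (`ingress.yaml`). With the AWS Load Balancer Controller installed, an ALB is provisioned from an Ingress resource. A Service of `type: LoadBalancer` yields a Classic or Network Load Balancer instead, never an ALB, and the `alb.ingress.kubernetes.io/*` annotations have no effect on it.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-webapp-service
spec:
  selector:
    app: my-webapp
  ports:
    - port: 80
      targetPort: 8080  # container port of the web app
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-webapp-ingress
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip  # route straight to pod IPs
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
    alb.ingress.kubernetes.io/ssl-redirect: "443"  # HTTP 80 -> HTTPS 443
    alb.ingress.kubernetes.io/certificate-arn: "arn:aws:acm:region:account:certificate/1234-5678"
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-webapp-service
                port:
                  number: 80
```

- This config creates an internet-facing ALB that terminates TLS with the specified ACM certificate, redirects HTTP to HTTPS, and routes traffic directly to pods on port 8080.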

- Certificate Management

- Use AWS Certificate Manager (ACM) to provision/renew certificates automatically.

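- For multi-domain or wildcard certificates, validate ownership via DNS or email; DNS validation lets ACM renew automatically as long as the validation record stays in place.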

- Health Checks & Redirection

- Configure HTTP → HTTPS redirection at the ALB level, forcing secure connections.

- Set health checks to a custom path (e.g., `/health`) that returns HTTP 200 OK.
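On the application side, the health check only needs an endpoint that answers quickly with 200. The sketch below uses only Python's standard library and listens on port 8080, the `targetPort` in the manifest above. The handler name is hypothetical. With the AWS Load Balancer Controller, the `alb.ingress.kubernetes.io/healthcheck-path: /health` annotation points the ALB at this path. One design choice is to keep the endpoint cheap and independent of downstream services, so a slow database does not make the ALB mark every pod unhealthy at once.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/health":
            body = b"ok"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, fmt, *args):
        pass  # keep frequent health-check probes out of the application log


def serve(port=8080):
    # Bind all interfaces so the ALB (ip target type) can reach the pod IP
    ThreadingHTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()


if __name__ == "__main__":
    serve()
```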

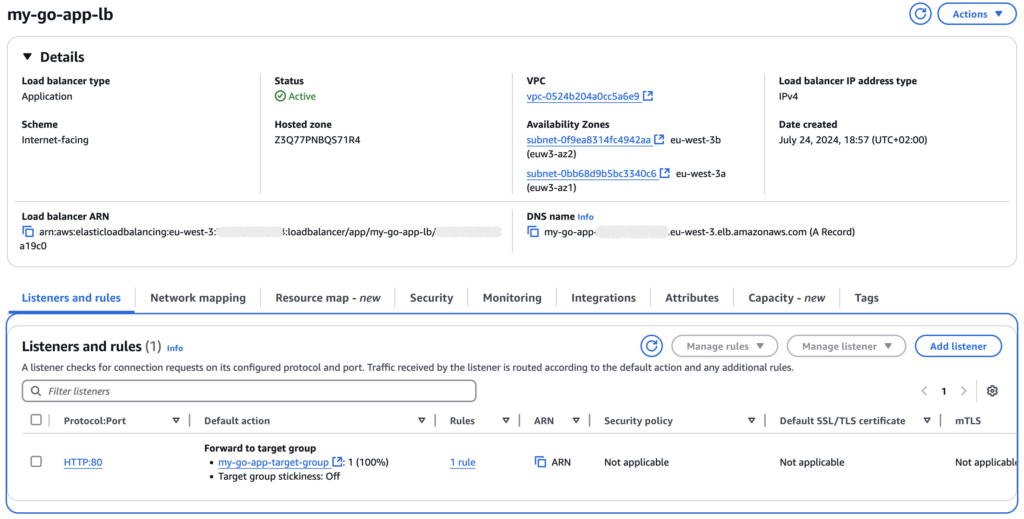

This screenshot shows essential information about an Application Load Balancer named my-go-app-lb: its scheme (internet-facing), the Availability Zones and subnets it spans (eu-west-3a/b), and the DNS name used to route traffic. It also shows a listener on port 80 forwarding requests to a target group (my-go-app-target-group) with stickiness disabled. Because that listener forwards plain HTTP instead of redirecting it, traffic on port 80 reaches the application unencrypted; in production, add an HTTPS listener on 443 and turn the port 80 listener into a redirect. The ARN, hosted zone, and creation date provide further operational context, but identifiers such as the ARN or DNS name are best redacted or partially hidden when sharing publicly.

4.2 Security Group Rules

- Inbound

- Permit port 443 from the Internet, optionally allow port 80 only for an immediate redirect.

- If the application is internal-only, set the `alb.ingress.kubernetes.io/scheme` annotation to `internal` instead of `internet-facing`.

- Outbound

- Let the ALB reach its targets: the NodePort on worker nodes with `instance` targets, or the pod IPs on the container port with `ip` targets.

- If pods require external calls (e.g., to third-party APIs), confirm those egress rules are tightly scoped.

- Additional Hardening

- For advanced setups, combine the ALB with AWS WAF to filter malicious payloads.

- Enable ALB access logs (delivered to S3 every 5 minutes) and forward them to a SIEM platform to analyze patterns.
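The inbound rules above can be written down as data. The sketch below builds them in the `IpPermissions` shape accepted by EC2 `AuthorizeSecurityGroupIngress`. The ALB's group takes 443, plus 80 only for the redirect, from the internet. The targets' group accepts the application port only from the ALB's security group, not from any CIDR. The function name and group IDs are hypothetical. If the AWS Load Balancer Controller manages the security groups for you, use this as a reference shape to compare against rather than applying it yourself.

```python
def alb_security_rules(alb_sg_id, container_port, redirect_http=True):
    """Build inbound rules in the IpPermissions shape used by EC2
    AuthorizeSecurityGroupIngress.

    Returns (rules for the ALB's security group, rules for the targets' group).
    """
    def internet(port, why):
        return {"IpProtocol": "tcp", "FromPort": port, "ToPort": port,
                "IpRanges": [{"CidrIp": "0.0.0.0/0", "Description": why}]}

    alb_rules = [internet(443, "HTTPS from the internet")]
    if redirect_http:
        alb_rules.append(internet(80, "HTTP, redirected to HTTPS by the listener"))

    # Targets accept traffic only from the ALB's security group, on the app port
    target_rules = [{
        "IpProtocol": "tcp", "FromPort": container_port, "ToPort": container_port,
        "UserIdGroupPairs": [{"GroupId": alb_sg_id, "Description": "from the ALB only"}],
    }]
    return alb_rules, target_rules
```

With boto3, each list would be passed as `ec2.authorize_security_group_ingress(GroupId=..., IpPermissions=rules)` against the corresponding group.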

Recommended Cybersecurity Tools

- AWS WAF

- Define rules to detect/block common attacks (SQL injection, XSS).

- Non-AWS: ModSecurity (as a WAF engine with Apache/nginx) or Cloudflare WAF for broader multi-cloud or edge coverage.

- AWS Firewall Manager

- Centralize WAF or Shield Advanced policies across multiple accounts.

- Alternatives: Imperva Cloud WAF, Akamai for distributed security enforcement.

- AWS Shield Advanced

- Enhanced DDoS mitigation for ALB, CloudFront, and Route 53.

- Non-AWS: Cloudflare DDoS Protection, Radware for enterprise-level DDoS defense.

5. Main Challenges: IAM and Load Balancing

5.1 IAM (Identity & Access Management)

- Granular Policies

- Use JSON-based IAM policies specifying allowed actions, resources, and conditions.

- Tools like IAM Access Analyzer help find roles granting external or overly broad access.

- Key & Credential Rotation

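- Prefer IAM roles, which issue temporary credentials, over long-lived access keys; where IAM user keys are unavoidable, rotate them regularly.

- Route rotation events through Amazon EventBridge (formerly CloudWatch Events) to an SNS topic for alerts.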

- Auditing & Compliance

- AWS Config: Use managed rules such as `s3-bucket-public-read-prohibited` and `restricted-ssh` to flag S3 buckets with public read access or security groups that allow SSH from anywhere.

- IAM Identity Center (AWS SSO): Centralize employee access; tie user provisioning/deprovisioning to corporate directories.
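A simple way to make regular key rotation auditable is to flag active access keys older than a threshold. The sketch below works on items shaped like the `AccessKeyMetadata` entries returned by IAM `ListAccessKeys` (`aws iam list-access-keys`), whose `CreateDate` is timezone-aware. The IAM credential report is an account-wide alternative. The 90-day default is an assumption, chosen because it is a commonly cited rotation interval; it is not an AWS limit.

```python
from datetime import datetime, timedelta, timezone


def keys_due_for_rotation(access_keys, now=None, max_age_days=90):
    """Return (user, key id, age in days) for active keys older than the limit.

    `access_keys` follows the AccessKeyMetadata items returned by IAM
    ListAccessKeys: UserName, AccessKeyId, Status, and a timezone-aware CreateDate.
    """
    now = now or datetime.now(timezone.utc)
    limit = timedelta(days=max_age_days)
    return [
        (k["UserName"], k["AccessKeyId"], (now - k["CreateDate"]).days)
        for k in access_keys
        if k["Status"] == "Active" and now - k["CreateDate"] > limit
    ]
```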

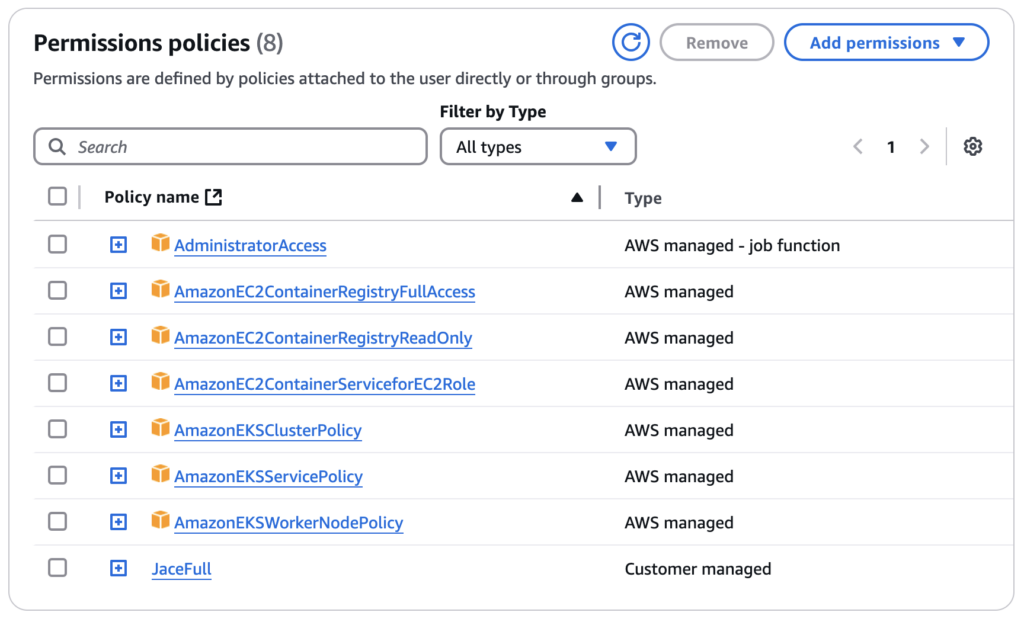

Granting AdministratorAccess plus multiple AWS-managed policies (like ECR Full, EKS policies, and a custom “Full” policy) to the same user goes far beyond least privilege. AdministratorAccess already allows every action on every resource, so the other grants are redundant, and the combination obscures what the user actually needs while maximizing the damage if the account is ever compromised. Instead, attach only the specific permissions each user or role requires, use separate roles for cluster services and worker nodes, and review custom policies (like “JaceFull”) to ensure they don’t duplicate admin-level access.
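As a contrast to stacked "Full" policies, here is a sketch of a least-privilege identity policy for the role that runs `mongodb_backup.sh`. Uploading with `aws s3 sync` needs `s3:ListBucket` on the bucket and `s3:PutObject` on its objects. An optional statement allows reading a single Secrets Manager secret, for the case where the script fetches its password there. The sketch assumes default SSE-S3 encryption on the bucket; with SSE-KMS the role would also need KMS permissions on the key. The function name and ARNs are hypothetical.

```python
import json


def backup_writer_policy(bucket, secret_arn=None):
    """Least-privilege identity policy for the EC2 backup role (sketch).

    Allows listing one bucket and writing objects into it; optionally allows
    reading exactly one Secrets Manager secret (the database password).
    """
    statements = [
        {"Sid": "ListBackupBucket", "Effect": "Allow",
         "Action": "s3:ListBucket", "Resource": f"arn:aws:s3:::{bucket}"},
        {"Sid": "WriteBackupObjects", "Effect": "Allow",
         "Action": "s3:PutObject", "Resource": f"arn:aws:s3:::{bucket}/*"},
    ]
    if secret_arn:
        statements.append({"Sid": "ReadDbPassword", "Effect": "Allow",
                           "Action": "secretsmanager:GetSecretValue",
                           "Resource": secret_arn})
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    print(json.dumps(backup_writer_policy("my-backup-bucket"), indent=2))
```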

Additional Tools for IAM

- Okta / Auth0 / Keycloak: For single sign-on and identity federation in multi-cloud or hybrid environments.

- HashiCorp Sentinel / Open Policy Agent: Extend policy-as-code to IAM, verifying each resource meets security constraints (e.g., no wildcards in roles).
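Sentinel and OPA express such constraints in their own languages (Sentinel and Rego). The Python below only restates a "no wildcards" check over a standard IAM policy document, as an illustration of the logic rather than either tool's API. It flags `Allow` statements whose actions are `*` or service-wide (`service:*`), whose resource is `*`, or which use `NotAction`. AdministratorAccess, which allows `*` on `*`, is the canonical case it catches.

```python
def wildcard_findings(policy):
    """Flag overly broad Allow statements in an IAM policy document (sketch)."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]  # a single statement may be given as an object
    findings = []
    for i, st in enumerate(statements):
        if st.get("Effect") != "Allow":
            continue  # Deny statements only narrow access
        actions = st.get("Action", [])
        resources = st.get("Resource", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        for a in actions:
            if a == "*" or a.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {a!r}")
        if "*" in resources:
            findings.append(f"statement {i}: wildcard resource")
        if "NotAction" in st:
            findings.append(f"statement {i}: Allow with NotAction")
    return findings
```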

5.2 Load Balancing

- HTTPS Enforcement

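- If you do not plan to redirect HTTP, do not open port 80 at all. Otherwise, configure a redirect action (HTTP 301 permanent or 302 temporary) on the ALB’s port 80 listener so every client ends up on HTTPS.

- Keep an eye on the certificate’s expiration date: ACM renews DNS-validated certificates automatically, but renewal can fail if the validation record is removed or the domain’s DNS changes.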

- Scaling & Performance

- ALBs scale automatically, but very sudden traffic surges can outpace scaling; load-test ahead of expected spikes.

- Monitor `TargetResponseTime` in CloudWatch to detect slow or misconfigured targets, and `UnHealthyHostCount` to catch health check failures.

- Logging & Analytics

- ALB access logs can be sent to S3, then queried via Amazon Athena or a self-hosted solution (e.g., ELK Stack).

- Look for repeated 4xx or 5xx errors, spikes in request volume, or suspicious paths that might indicate reconnaissance.
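Athena is the natural tool for querying ALB logs at scale. The same idea can also be sketched locally on decompressed log lines to find clients that produce many 4xx/5xx responses and the paths they hit. The field positions follow the documented ALB access-log format: client:port is field 3, elb_status_code is field 8, and the quoted request is field 12. Treat them as an assumption to re-check against the current documentation. The threshold value and function name are illustrative.

```python
import shlex
from collections import Counter
from urllib.parse import urlsplit

# Field positions in the documented ALB access-log format (space-separated,
# with the request and user agent in double quotes)
CLIENT, ELB_STATUS, REQUEST = 3, 8, 12


def summarize_alb_log(lines, threshold=20):
    """Return ({client_ip: error_count} for noisy clients, top error paths)."""
    errors_by_client = Counter()
    error_paths = Counter()
    for line in lines:
        try:
            fields = shlex.split(line)
        except ValueError:
            continue  # skip malformed lines (e.g. unbalanced quotes)
        if len(fields) <= REQUEST:
            continue
        status = fields[ELB_STATUS]
        if not (status.isdigit() and status[0] in "45"):
            continue  # only 4xx/5xx responses are of interest here
        client_ip = fields[CLIENT].rsplit(":", 1)[0]
        errors_by_client[client_ip] += 1
        # The request field looks like: GET https://host:443/path?query HTTP/1.1
        parts = fields[REQUEST].split(" ")
        if len(parts) >= 2:
            error_paths[urlsplit(parts[1]).path] += 1
    noisy = {ip: n for ip, n in errors_by_client.items() if n >= threshold}
    return noisy, error_paths.most_common(5)
```

Repeated 404s on paths such as `/wp-login.php` from a single client are a typical reconnaissance signature worth alerting on.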

Securing AWS environments means meticulously orchestrating defenses across EC2 (for your database), EKS (for containerized applications), and an HTTPS Load Balancer (for encrypted traffic and potential WAF integration). The pivotal elements—IAM and load balancing—demand special care. IAM roles must be strictly scoped, adopting a least-privilege approach, and regularly audited via AWS Security Hub or external tooling (Wazuh, Splunk). Meanwhile, your Load Balancer emerges as both an entry point for encrypted traffic and a platform for advanced protection (e.g., WAF, custom rules, DDoS mitigation).

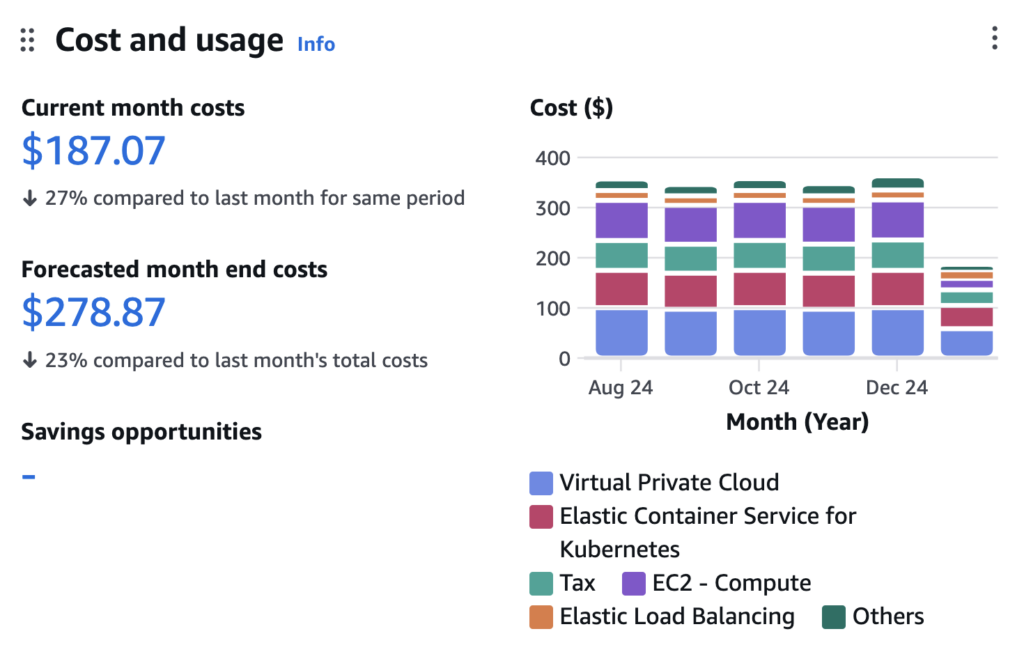

While robust security and scalability are paramount, it’s equally important to track the expenses tied to your AWS resources—especially as you spin up EC2 instances, run EKS clusters, or deploy additional services. Tools like AWS Cost Explorer and AWS Budgets enable you to monitor usage patterns, set spending thresholds, and receive alerts before costs escalate unexpectedly. If you prefer more in-depth analysis or multi-cloud coverage, consider third-party solutions such as CloudHealth or CloudZero. By actively reviewing these metrics and adjusting resource allocations, you ensure a sustainable balance between security, performance, and budget.

Whether you rely solely on AWS-native services (GuardDuty, Inspector, Shield) or combine them with third-party or open-source alternatives (ModSecurity, Cloudflare, Suricata, etc.), your end goal remains the same: a consistently updated, closely monitored, and securely configured environment. As threat actors evolve their tactics, continuous improvement—keeping OS patches current, rotating credentials, refining network policies—is crucial. By treating security as a core design principle rather than an afterthought, you significantly boost the resilience of your cloud deployments while safeguarding your digital frontier.

Author Bio

Krzysztof Raczynski is a cybersecurity professional committed to sharing hands-on insights drawn from his work securing complex AWS infrastructures. He thrives on designing robust solutions around containers, threat detection, and cloud-native best practices. Outside of day-to-day engineering, Krzysztof invests in knowledge-sharing—crafting thorough tutorials, speaking at industry meetups, and championing forward-thinking approaches to modern cyber defense.